Introduction to Linux

A Hands on Guide

Machtelt Garrels

1.27 Edition

Copyright © 2002, 2003, 2004, 2005, 2006, 2007, 2008 Machtelt Garrels

20080606

- Table of Contents

- Introduction

- 1. What is Linux?

- 1.1. History

- 1.2. The user interface

- 1.3. Does Linux have a future?

- 1.4. Properties of Linux

- 1.5. Linux Flavors

- 1.6. Summary

- 1.7. Exercises

- 2. Quickstart

- 2.1. Logging in, activating the user interface and logging out

- 2.2. Absolute basics

- 2.3. Getting help

- 2.4. Summary

- 2.5. Exercises

- 3. About files and the file system

- 3.1. General overview of the Linux file system

- 3.2. Orientation in the file system

- 3.3. Manipulating files

- 3.4. File security

- 3.5. Summary

- 3.6. Exercises

- 4. Processes

- 4.1. Processes inside out

- 4.2. Boot process, Init and shutdown

- 4.3. Managing processes

- 4.4. Scheduling processes

- 4.5. Summary

- 4.6. Exercises

- 5. I/O redirection

- 5.1. Simple redirections

- 5.2. Advanced redirection features

- 5.3. Filters

- 5.4. Summary

- 5.5. Exercises

- 6. Text editors

- 6.1. Text editors

- 6.2. Using the Vim editor

- 6.3. Linux in the office

- 6.4. Summary

- 6.5. Exercises

- 7. Home sweet /home

- 7.1. General good housekeeping

- 7.2. Your text environment

- 7.3. The graphical environment

- 7.4. Region specific settings

- 7.5. Installing new software

- 7.6. Summary

- 7.7. Exercises

- 8. Printers and printing

- 8.1. Printing files

- 8.2. The server side

- 8.3. Print problems

- 8.4. Summary

- 8.5. Exercises

- 9. Fundamental Backup Techniques

- 9.1. Introduction

- 9.2. Moving your data to a backup device

- 9.3. Using rsync

- 9.4. Encryption

- 9.5. Summary

- 9.6. Exercises

- 10. Networking

- 10.1. Networking Overview

- 10.2. Network configuration and information

- 10.3. Internet/Intranet applications

- 10.4. Remote execution of applications

- 10.5. Security

- 10.6. Summary

- 10.7. Exercises

- 11. Sound and Video

- 11.1. Audio Basics

- 11.2. Sound and video playing

- 11.3. Video playing, streams and television watching

- 11.4. Internet Telephony

- 11.5. Summary

- 11.6. Exercises

- A. Where to go from here?

- A.1. Useful Books

- A.2. Useful sites

- B. DOS versus Linux commands

- C. Shell Features

- C.1. Common features

- C.2. Differing features

- Glossary

- Index

- List of Tables

- 1. Typographic and usage conventions

- 2-1. Quickstart commands

- 2-2. Key combinations in Bash

- 2-3. New commands in chapter 2: Basics

- 3-1. File types in a long list

- 3-2. Subdirectories of the root directory

- 3-3. Most common configuration files

- 3-4. Common devices

- 3-5. Color-ls default color scheme

- 3-6. Default suffix scheme for ls

- 3-7. Access mode codes

- 3-8. User group codes

- 3-9. File protection with chmod

- 3-10. New commands in chapter 3: Files and the file system

- 3-11. File permissions

- 4-1. Controlling processes

- 4-2. Common signals

- 4-3. New commands in chapter 4: Processes

- 5-1. New commands in chapter 5: I/O redirection

- 7-1. Common environment variables

- 7-2. New commands in chapter 7: Making yourself at home

- 8-1. New commands in chapter 8: Printing

- 9-1. New commands in chapter 9: Backup

- 10-1. The simplified OSI Model

- 10-2. New commands in chapter 10: Networking

- 11-1. New commands in chapter 11: Audio

- B-1. Overview of DOS/Linux commands

- C-1. Common Shell Features

- C-2. Differing Shell Features

- List of Figures

- 1. Introduction to Linux front cover

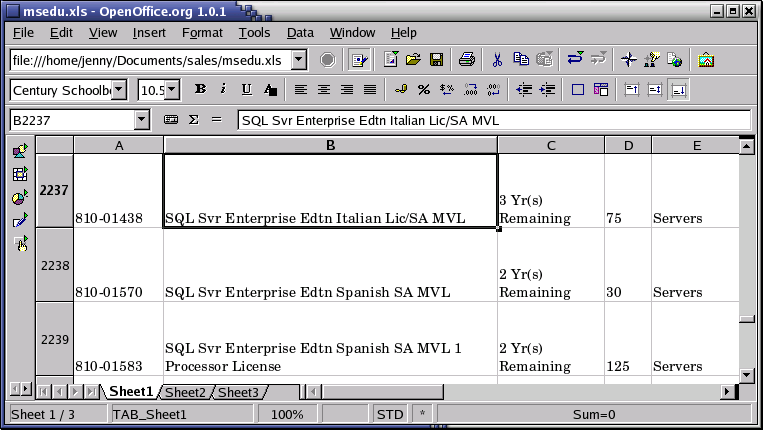

- 1-1. OpenOffice MS-compatible Spreadsheet

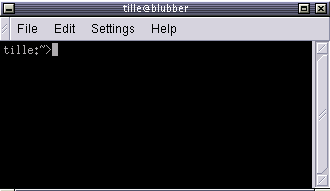

- 2-1. Terminal window

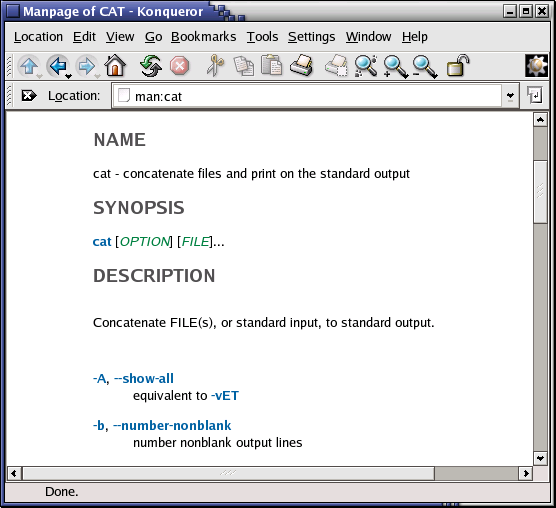

- 2-2. Konqueror as help browser

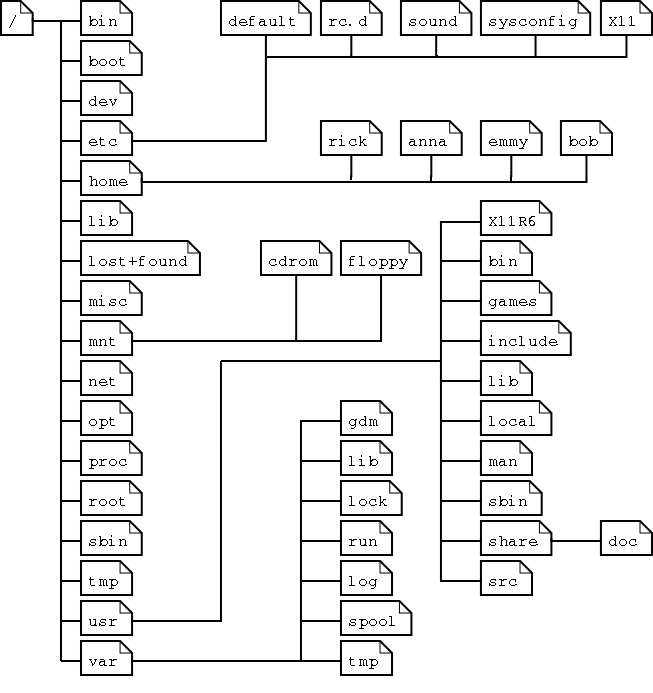

- 3-1. Linux file system layout

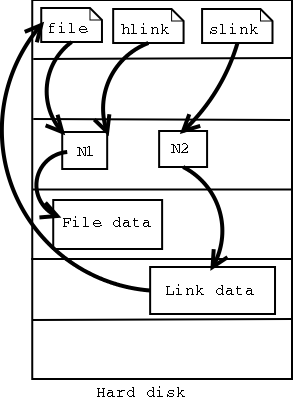

- 3-2. Hard and soft link mechanism

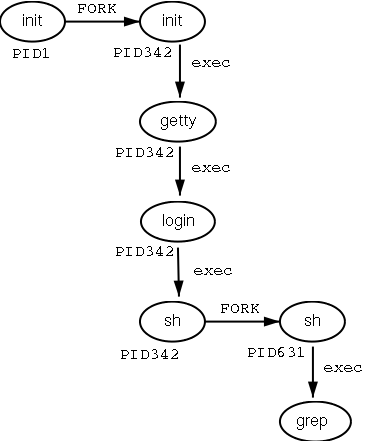

- 4-1. Fork-and-exec mechanism

- 4-2. Can't you go faster?

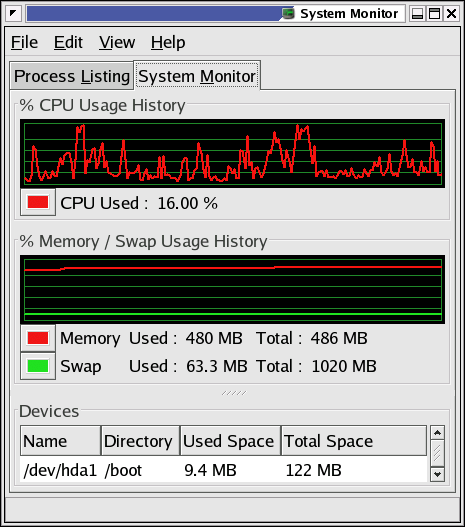

- 4-3. Gnome System Monitor

- 8-1. Printer Status through web interface

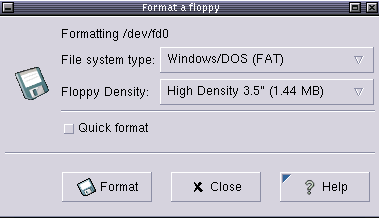

- 9-1. Floppy formatter

- 10-1. Evolution mail and news reader

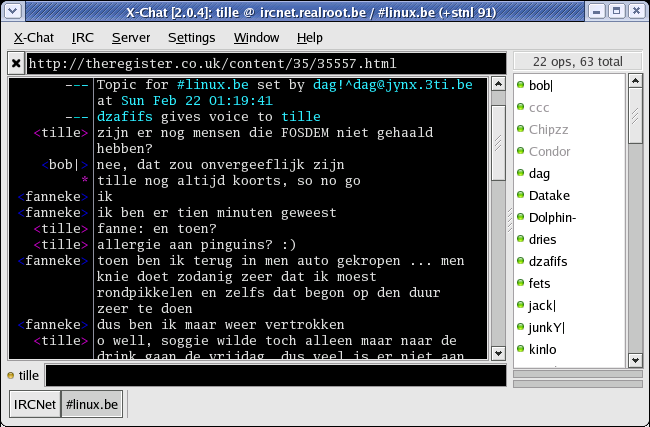

- 10-2. X-Chat

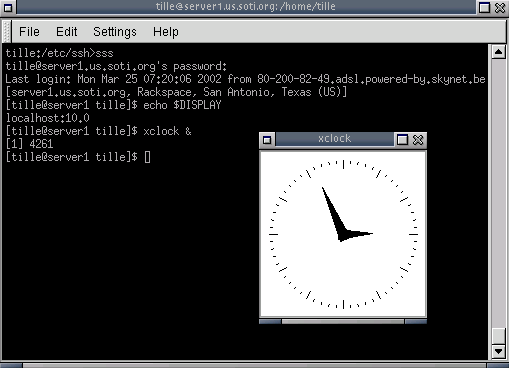

- 10-3. SSH X11 forwarding

- 11-1. XMMS mp3 player

Introduction

1. Why this guide?

Many people still believe that learning Linux is difficult, or that only experts can understand how a Linux system works. Though there is a lot of free documentation available, the documentation is widely scattered on the Web, and often confusing, since it is usually oriented toward experienced UNIX or Linux users. Today, thanks to the advancements in development, Linux has grown in popularity both at home and at work. The goal of this guide is to show people of all ages that Linux can be simple and fun, and used for all kinds of purposes.

2. Who should read this book?

This guide was created as an overview of the Linux Operating System, geared toward new users as an exploration tour and getting started guide, with exercises at the end of each chapter. For more advanced trainees it can be a desktop reference, and a collection of the base knowledge needed to proceed with system and network administration. This book contains many real life examples derived from the author's experience as a Linux system and network administrator, trainer and consultant. We hope these examples will help you to get a better understanding of the Linux system and that you feel encouraged to try out things on your own.

Everybody who wants to get a "CLUE", a Command Line User Experience, with Linux (and UNIX in general) will find this book useful.

3. New versions and availability

This document is published in the Guides section of the Linux Documentation Project collection at http://www.tldp.org/guides.html; you can also download PDF and PostScript formatted versions here.

The most recent edition is available at http://tille.garrels.be/training/tldp/.

The second edition of this guide is available in print from Fultus.com Books as a paperback Print On Demand (POD) book. Fultus distributes this document through Ingram and Baker & Taylor to many bookstores, including Amazon.com, Amazon.co.uk, BarnesAndNoble.com and Google's Froogle global shopping portal and Google Book Search.

The guide has been translated into Hindi by:

Alok Kumar

Dhananjay Sharma

Kapil

Puneet Goel

Ravikant Yuyutsu

Andrea Montagner translated the guide into Italian.

4. Revision History

| Revision | Date | Revised by | Changes |
|---|---|---|---|
| 1.27 | 20080606 | MG | Updates. |
| 1.26 | 20070919 | MG | Comments from readers, license. |
| 1.25 | 20070511 | MG | Comments from readers, minor updates, E-mail etiquette, updated info about availability (thanks Oleg). |
| 1.24 | 2006-11-01 | MG | Added index terms, prepared for second printed edition, added gpg and proxy info. |
| 1.23 | 2006-07-25 | MG and FK | Updates and corrections, removed app5 again, adapted license to enable inclusion in Debian docs. |
| 1.22 | 2006-04-06 | MG | chap8 revised completely, chap10: clarified examples, added ifconfig and cygwin info, revised network apps. |
| 1.21 | 2006-03-14 | MG | Added exercises in chap11, corrected newline errors, command overview completed for chapter 9, minor corrections in chap10. |
| 1.20 | 2006-01-06 | MG | Split chap7: audio stuff is now in separate chapter, chap11.xml. Small revisions, updates for commands like aptitude, more on USB storage, Internet telephony, corrections from readers. |
| 1.13 | 2004-04-27 | MG | Last read-through before sending everything to Fultus for printout. Added Fultus reference in New Versions section, updated Conventions and Organization sections. Minor changes in chapters 4, 5, 6 and 8, added rdesktop info in chapter 10, updated glossary, replaced references to fileutils with coreutils, thank-you to Hindi translators. |

5. Contributions

Many thanks to all the people who shared their experiences. And especially to the Belgian Linux users for hearing me out every day and always being generous in their comments.

Also a special thought for Tabatha Marshall for doing a really thorough revision, spell check and styling, and to Eugene Crosser for spotting the errors that we two overlooked.

And thanks to all the readers who notified me about missing topics and who helped to pick out the last errors, unclear definitions and typos by going through the trouble of mailing me all their remarks. These are also the people who help me keep this guide up to date, like Filipus Klutiero, who did a complete review in 2005 and 2006 and helps me get the guide into the Debian docs collection, and Alexey Eremenko, who sent me the foundation for chapter 11.

In 2006, Suresh Rajashekara created a Debian package of this documentation.

Finally, a big thank you to the volunteers who are currently translating this document into French, Swedish, German, Farsi, Hindi and more. It is a big job that should not be underestimated; I admire your courage.

6. Feedback

Missing information, missing links, missing characters? Mail it to the maintainer of this document:

<tille wants no spam _at_ garrels dot be>

Don't forget to check with the latest version first!

7. Copyright information

Copyright (c) 2002-2007, Machtelt Garrels

All rights reserved.

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

- Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

- Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

- Neither the name of the author, Machtelt Garrels, nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

THIS SOFTWARE IS PROVIDED BY THE AUTHOR AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE AUTHOR AND CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

The logos, trademarks and symbols used in this book are the properties of their respective owners.

8. What do you need?

You will require a computer and a medium containing a Linux distribution. Most of this guide applies to all Linux distributions - and UNIX in general. Apart from time, there are no further specific requirements.

The Installation HOWTO contains helpful information on how to obtain Linux software and install it on your computer. Hardware requirements and coexistence with other operating systems are also discussed.

CD images can be downloaded from linux-iso.com and many other locations, see Appendix A.

An interesting alternative for those who don't dare to take the step of an actual Linux installation on their machine is a Linux distribution that you can run from a CD, such as the Knoppix distribution.

9. Conventions used in this document

The following typographic and usage conventions occur in this text:

Table 1. Typographic and usage conventions

| Text type | Meaning |
|---|---|
| "Quoted text" | Quotes from people, quoted computer output. |
| | Literal computer input and output captured from the terminal, usually rendered with a light grey background. |
| command | Name of a command that can be entered on the command line. |
| VARIABLE | Name of a variable or pointer to content of a variable, as in $VARNAME. |
| option | Option to a command, as in "the -a option to the ls command". |
| argument | Argument to a command, as in "read man ls". |
| prompt | User prompt, usually followed by a command that you type in a terminal window, like in hilda@home> ls -l |
| command options arguments | Command synopsis or general usage, on a separate line. |
| filename | Name of a file or directory, for example "Change to the /usr/bin directory." |
| Key | Keys to hit on the keyboard, such as "type Q to quit". |
| | Graphical button to click, like the button. |
| -> | Choice to select from a graphical menu, for instance: "Select-> in your browser." |
| Terminology | Important term or concept: "The Linux kernel is the heart of the system." |
| \ | The backslash in a terminal view or command synopsis indicates an unfinished line. In other words, if you see a long command that is cut into multiple lines, \ means "Don't press Enter yet!" |
| See Chapter 1 | Link to a related subject within this guide. |
| The author | Clickable link to an external web resource. |
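As a small illustration of the backslash convention from the table, the sketch below splits one command over two lines. The variable name `joined` and the message text are chosen only for this example; they do not come from later chapters.

```shell
# One command written on two lines: the backslash at the end of the first
# line tells the shell that the command continues on the next line.
joined=$(echo "Don't press" \
    "Enter yet!")

# Show the result; both arguments were passed to a single echo command.
echo "$joined"
```

When you type such a command by hand, the shell typically shows a secondary prompt after the backslash until the command is complete.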

The following images are used:

| Image title | Meaning |
|---|---|
| This is a note | It contains additional information or remarks. |
| This is a caution | It means be careful. |
| This is a warning | Be very careful. |
| This is a tip | Tips and tricks. |

10. Organization of this document

This guide is part of the Linux Documentation Project and aims to be the foundation for all other materials that you can get from the Project. As such, it provides you with the fundamental knowledge needed by anyone who wants to start working with a Linux system, while at the same time it consciously tries to avoid reinventing the wheel. Thus, you can expect this book to be incomplete and full of links to sources of additional information on your system, on the Internet and in your system documentation.

The first chapter is an introduction to the subject of Linux; the next two discuss absolute basic commands. Chapters 4 and 5 discuss some more advanced but still basic topics. Chapter 6 is needed for continuing with the rest, since it discusses editing files, an ability you need to pass from Linux newbie to Linux user. The following chapters discuss somewhat more advanced topics that you will have to deal with in everyday Linux use.

All chapters come with exercises that will test your preparedness for the next chapter.

Chapter 1: What is Linux, how did it come into existence, advantages and disadvantages, what does the future hold for Linux, who should use it, installing your computer.

Chapter 2: Getting started, connecting to the system, basic commands, where to find help.

Chapter 3: The filesystem, important files and directories, managing files and directories, protecting your data.

Chapter 4: Understanding and managing processes, boot and shutdown procedures, postponing tasks, repetitive tasks.

Chapter 5: What are standard input, output and error and how are these features used from the command line.

Chapter 6: Why you should learn to work with an editor, discussion of the most common editors.

Chapter 7: Configuring your graphical, text and audio environment, settings for the non-native English speaking Linux user, tips for adding extra software.

Chapter 8: Converting files to a printable format, getting them out of the printer, hints for solving print problems.

Chapter 9: Preparing data to be backed up, discussion of various tools, remote backup.

Chapter 10: Overview of Linux networking tools and user applications, with a short discussion of the underlying service daemon programs and secure networking.

Chapter 11: Sound and video, including Voice over IP and sound recording.

Appendix A: Which books to read and sites to visit when you have finished reading this one.

Appendix B: A comparison of DOS and Linux commands.

Appendix C: If you ever get stuck, these tables might offer a way out. Also a good argument when your boss insists that YOU should use HIS favorite shell.

Chapter 1. What is Linux?

We will start with an overview of how Linux became the operating system it is today. We will discuss past and future development and take a closer look at the advantages and disadvantages of this system. We will talk about distributions, about Open Source in general and try to explain a little something about GNU.

This chapter answers questions like:

What is Linux?

Where and how did Linux start?

Isn't Linux that system where everything is done in text mode?

Does Linux have a future or is it just hype?

What are the advantages of using Linux?

What are the disadvantages?

What kinds of Linux are there and how do I choose the one that fits me?

What are the Open Source and GNU movements?

1.1. History

1.1.1. UNIX

In order to understand the popularity of Linux, we need to travel back in time, about 30 years ago...

Imagine computers as big as houses, even stadiums. While the sizes of those computers posed substantial problems, there was one thing that made this even worse: every computer had a different operating system. Software was always customized to serve a specific purpose, and software for one given system didn't run on another system. Being able to work with one system didn't automatically mean that you could work with another. It was difficult, both for the users and the system administrators.

Computers were extremely expensive then, and sacrifices had to be made even after the original purchase just to get the users to understand how they worked. The total cost per unit of computing power was enormous.

Technologically the world was not quite that advanced, so they had to live with the size for another decade. In 1969, a team of developers in the Bell Labs laboratories started working on a solution for the software problem, to address these compatibility issues. They developed a new operating system, which was:

- Simple and elegant.

- Written in the C programming language instead of in assembly code.

- Able to recycle code.

The Bell Labs developers named their project "UNIX."

The code recycling features were very important. Until then, all commercially available computer systems were written in a code specifically developed for one system. UNIX on the other hand needed only a small piece of that special code, which is now commonly named the kernel. This kernel is the only piece of code that needs to be adapted for every specific system and forms the base of the UNIX system. The operating system and all other functions were built around this kernel and written in a higher programming language, C. This language was especially developed for creating the UNIX system. Using this new technique, it was much easier to develop an operating system that could run on many different types of hardware.

The software vendors were quick to adapt, since they could sell ten times more software almost effortlessly. Weird new situations came into existence: imagine for instance computers from different vendors communicating in the same network, or users working on different systems without the need for extra education to use another computer. UNIX did a great deal to help users become compatible with different systems.

Throughout the next couple of decades the development of UNIX continued. More things became possible to do and more hardware and software vendors added support for UNIX to their products.

UNIX was initially found only in very large environments with mainframes and minicomputers (note that a PC is a "micro" computer). You had to work at a university, for the government or for large financial corporations in order to get your hands on a UNIX system.

But smaller computers were being developed, and by the end of the 80s, many people had home computers. By that time, there were several versions of UNIX available for the PC architecture, but none of them were truly free and, more importantly, they were all terribly slow, so most people ran MS DOS or Windows 3.1 on their home PCs.

1.1.2. Linus and Linux

By the beginning of the 90s home PCs were finally powerful enough to run a full-blown UNIX. Linus Torvalds, a young man studying computer science at the University of Helsinki, thought it would be a good idea to have some sort of freely available academic version of UNIX, and promptly started to code.

He started to ask questions, looking for answers and solutions that would help him get UNIX on his PC. Below is one of his first posts in comp.os.minix, dating from 1991:

From: torvalds@klaava.Helsinki.FI (Linus Benedict Torvalds)
Newsgroups: comp.os.minix
Subject: Gcc-1.40 and a posix-question
Message-ID: <1991Jul3.100050.9886@klaava.Helsinki.FI>
Date: 3 Jul 91 10:00:50 GMT

Hello netlanders,

Due to a project I'm working on (in minix), I'm interested in the posix standard definition. Could somebody please point me to a (preferably) machine-readable format of the latest posix rules? Ftp-sites would be nice.

From the start, it was Linus' goal to have a free system that was completely compliant with the original UNIX. That is why he asked for POSIX standards, POSIX still being the standard for UNIX.

In those days plug-and-play hadn't been invented yet, but so many people were interested in having a UNIX system of their own that this was only a small obstacle. New drivers became available for all kinds of new hardware, at a continuously rising speed. Almost as soon as a new piece of hardware became available, someone bought it and submitted it to the Linux test, as the system was gradually being called, releasing more free code for an ever wider range of hardware. These coders didn't stop at their PCs; every piece of hardware they could find was useful for Linux.

Back then, those people were called "nerds" or "freaks", but it didn't matter to them, as long as the supported hardware list grew longer and longer. Thanks to these people, Linux is now not only ideal to run on new PCs, but is also the system of choice for old and exotic hardware that would be useless if Linux didn't exist.

Two years after Linus' post, there were 12000 Linux users. The project, popular with hobbyists, grew steadily, all the while staying within the bounds of the POSIX standard. All the features of UNIX were added over the next couple of years, resulting in the mature operating system Linux has become today. Linux is a full UNIX clone, fit for use on workstations as well as on middle-range and high-end servers. Today, a lot of the important players on the hardware and software market each have their team of Linux developers; at your local dealer's you can even buy pre-installed Linux systems with official support, even though there is still a lot of hardware and software that is not supported.

1.1.3. Current application of Linux systems

Today Linux has joined the desktop market. Linux developers concentrated on networking and services in the beginning, and office applications have been the last barrier to be taken down. We don't like to admit that Microsoft is ruling this market, so plenty of alternatives have been started over the last couple of years to make Linux an acceptable choice as a workstation, providing an easy user interface and MS compatible office applications like word processors, spreadsheets, presentations and the like.

On the server side, Linux is well-known as a stable and reliable platform, providing database and trading services for companies like Amazon, the well-known online bookshop, the US Post Office, the German army and many others. Internet providers and Internet service providers in particular have grown fond of Linux as a firewall, proxy and web server, and you will find a Linux box within reach of every UNIX system administrator who appreciates a comfortable management station. Clusters of Linux machines are used in the creation of movies such as "Titanic", "Shrek" and others. In post offices, they are the nerve centers that route mail, and in large search engines, clusters are used to perform internet searches. These are only a few of the thousands of heavy-duty jobs that Linux is performing day-to-day across the world.

It is also worth noting that modern Linux not only runs on workstations, mid- and high-end servers, but also on "gadgets" like PDAs, mobiles, a shipload of embedded applications and even on experimental wristwatches. This makes Linux the only operating system in the world covering such a wide range of hardware.

1.2. The user interface

1.2.1. Is Linux difficult?

Whether Linux is difficult to learn depends on the person you're asking. Experienced UNIX users will say no: Linux is an ideal operating system for power users and programmers, because it has been and is being developed by such people.

Everything a good programmer can wish for is available: compilers, libraries, development and debugging tools. These packages come with every standard Linux distribution. The C-compiler is included for free - as opposed to many UNIX distributions demanding licensing fees for this tool. All the documentation and manuals are there, and examples are often included to help you get started in no time. It feels like UNIX and switching between UNIX and Linux is a natural thing.

In the early days of Linux, being an expert was kind of required to start using the system. Those who mastered Linux felt better than the rest of the "lusers" who hadn't seen the light yet. It was common practice to tell a beginning user to "RTFM" (read the manuals). While the manuals were on every system, it was difficult to find the documentation, and even if someone did, explanations were in such technical terms that the new user became easily discouraged from learning the system.

The Linux-using community started to realize that if Linux was ever to be an important player on the operating system market, there had to be some serious changes in the accessibility of the system.

1.2.2. Linux for non-experienced users

Companies such as RedHat, SuSE and Mandriva have sprung up, providing packaged Linux distributions suitable for mass consumption. They integrated a great many graphical user interfaces (GUIs), developed by the community, in order to ease management of programs and services. As a Linux user today you have all the means of getting to know your system inside out, but it is no longer necessary to have that knowledge in order to make the system comply with your requests.

Nowadays you can log in graphically and start all required applications without even having to type a single character, while you still have the ability to access the core of the system if needed. Because of its structure, Linux allows a user to grow into the system: it equally fits new and experienced users. New users are not forced to do difficult things, while experienced users are not forced to work in the same way they did when they first started learning Linux.

While development in the service area continues, great things are being done for desktop users, generally considered as the group least likely to know how a system works. Developers of desktop applications are making incredible efforts to make the most beautiful desktops you've ever seen, or to make your Linux machine look just like your former MS Windows or an Apple workstation. The latest developments also include 3D acceleration support and support for USB devices, single-click updates of system and packages, and so on. Linux has these, and tries to present all available services in a logical form that ordinary people can understand. Below is a short list containing some great examples; these sites have a lot of screenshots that will give you a glimpse of what Linux on the desktop can be like:

1.3. Does Linux have a future?

1.3.1. Open Source

The idea behind Open Source software is rather simple: when programmers can read, distribute and change code, the code will mature. People can adapt it, fix it, debug it, and they can do it at a speed that dwarfs the performance of software developers at conventional companies. This software will be more flexible and of a better quality than software that has been developed using the conventional channels, because more people have tested it in more different conditions than the closed software developer ever can.

The Open Source initiative started to make this clear to the commercial world, and very slowly, commercial vendors are starting to see the point. While lots of academics and technical people have already been convinced for 20 years now that this is the way to go, commercial vendors needed applications like the Internet to make them realize they can profit from Open Source. Now Linux has grown past the stage where it was almost exclusively an academic system, useful only to a handful of people with a technical background. Now Linux provides more than the operating system: there is an entire infrastructure supporting the chain of effort of creating an operating system, of making and testing programs for it, of bringing everything to the users, of supplying maintenance, updates and support and customizations, etcetera. Today, Linux is ready to accept the challenge of a fast-changing world.

1.3.2. Ten years of experience at your service

While Linux is probably the most well-known Open Source initiative, there is another project that contributed enormously to the popularity of the Linux operating system. This project is called SAMBA, and its achievement is the reverse engineering of the Server Message Block (SMB)/Common Internet File System (CIFS) protocol used for file- and print-serving on PC-related machines, natively supported by MS Windows NT and OS/2, and Linux. Packages are now available for almost every system and provide interconnection solutions in mixed environments using MS Windows protocols: Windows-compatible (up to and including WinXP) file- and print-servers.

Maybe even more successful than the SAMBA project is the Apache HTTP server project. The server runs on UNIX, Windows NT and many other operating systems. Originally known as "A PAtCHy server", based on existing code and a series of "patch files", the name for the matured code deserves to be connoted with the native American tribe of the Apache, well-known for their superior skills in warfare strategy and inexhaustible endurance. Apache has been shown to be substantially faster, more stable and more feature-full than many other web servers. Apache is run on sites that get millions of visitors per day, and while no official support is provided by the developers, the Apache user community provides answers to all your questions. Commercial support is now being provided by a number of third parties.

In the category of office applications, a choice of MS Office suite clones is available, ranging from partial to full implementations of the applications available on MS Windows workstations. These initiatives helped a great deal to make Linux acceptable for the desktop market, because the users don't need extra training to learn how to work with new systems. With the desktop comes the praise of the common users, and not only their praise, but also their specific requirements, which are growing more intricate and demanding by the day.

The Open Source community, consisting largely of people who have been contributing for over half a decade, assures Linux' position as an important player on the desktop market as well as in general IT application. Paid employees and volunteers alike are working diligently so that Linux can maintain a position in the market. The more users, the more questions. The Open Source community makes sure answers keep coming, and watches the quality of the answers with a suspicious eye, resulting in ever more stability and accessibility.

Listing all the available Linux software is beyond the scope of this guide, as there are tens of thousands of packages. Throughout this course we will present you with the most common packages, which are almost all freely available. In order to take away some of the fear of the beginning user, here's a screenshot of one of your most-wanted programs. You can see for yourself that no effort has been spared to make users who are switching from Windows feel at home:

1.4. Properties of Linux

1.4.1. Linux Pros

A lot of the advantages of Linux are a consequence of Linux' origins, deeply rooted in UNIX, except for the first advantage, of course:

Linux is free:

As in free beer, they say. If you want to spend absolutely nothing, you don't even have to pay the price of a CD. Linux can be downloaded in its entirety from the Internet completely for free. No registration fees, no costs per user, free updates, and freely available source code in case you want to change the behavior of your system.

Most of all, Linux is free as in free speech:

The license commonly used is the GNU General Public License (GPL). The license says that anybody who may want to do so has the right to change Linux and eventually to redistribute a changed version, on the one condition that the code is still available after redistribution. In practice, you are free to grab a kernel image, for instance to add support for teletransportation machines or time travel and sell your new code, as long as your customers can still have a copy of that code.

Linux is portable to any hardware platform:

A vendor who wants to sell a new type of computer and who doesn't know what kind of OS his new machine will run (say the CPU in your car or washing machine), can take a Linux kernel and make it work on his hardware, because documentation related to this activity is freely available.

Linux was made to keep on running:

As with UNIX, a Linux system expects to run without rebooting all the time. That is why a lot of tasks are being executed at night or scheduled automatically for other calm moments, resulting in higher availability during busier periods and a more balanced use of the hardware. This property allows for Linux to be applicable also in environments where people don't have the time or the possibility to control their systems night and day.

Linux is secure and versatile:

The security model used in Linux is based on the UNIX idea of security, which is known to be robust and of proven quality. But Linux is not only fit for use as a fort against enemy attacks from the Internet: it will adapt equally to other situations, utilizing the same high standards for security. Your development machine or control station will be as secure as your firewall.

Linux is scalable:

From a Palmtop with 2 MB of memory to a petabyte storage cluster with hundreds of nodes: add or remove the appropriate packages and Linux fits all. You don't need a supercomputer anymore, because you can use Linux to do big things using the building blocks provided with the system. If you want to do little things, such as making an operating system for an embedded processor or just recycling your old 486, Linux will do that as well.

The Linux OS and most Linux applications have very short debug-times:

Because Linux has been developed and tested by thousands of people, both errors and people to fix them are usually found rather quickly. It sometimes happens that there are only a couple of hours between discovery and fixing of a bug.

1.4.2. Linux Cons

There are far too many different distributions:

"Quot capites, tot rationes", as the Romans already said: the more people, the more opinions. At first glance, the number of Linux distributions can be frightening, or ridiculous, depending on your point of view. But it also means that everyone will find what he or she needs. You don't need to be an expert to find a suitable release.

When asked, generally every Linux user will say that the best distribution is the specific version he is using. So which one should you choose? Don't worry too much about that: all releases contain more or less the same set of basic packages. On top of the basics, special third party software is added making, for example, TurboLinux more suitable for the small and medium enterprise, RedHat for servers and SuSE for workstations. However, the differences are likely to be very superficial. The best strategy is to test a couple of distributions; unfortunately not everybody has the time for this. Luckily, there is plenty of advice on the subject of choosing your Linux. A quick search on Google, using the keywords "choosing your distribution", brings up tens of links to good advice. The Installation HOWTO also discusses choosing your distribution.

Linux is not very user friendly and confusing for beginners:

It must be said that Linux, at least the core system, is less user-friendly than MS Windows and certainly more difficult than MacOS, but... In light of its popularity, considerable effort has been made to make Linux even easier to use, especially for new users. More information is being released daily, such as this guide, to help fill the gap in documentation available to users at all levels.

Is an Open Source product trustworthy?

How can something that is free also be reliable? Linux users have the choice whether to use Linux or not, which gives them an enormous advantage compared to users of proprietary software, who don't have that kind of freedom. After long periods of testing, most Linux users come to the conclusion that Linux is not only as good, but in many cases better and faster than the traditional solutions. If Linux were not trustworthy, it would have been long gone, never knowing the popularity it has now, with millions of users. Now users can influence their systems and share their remarks with the community, so the system gets better and better every day. It is a project that is never finished, that is true, but in an ever changing environment, Linux is also a project that continues to strive for perfection.

1.5. Linux Flavors

1.5.1. Linux and GNU

Although there are a large number of Linux implementations, you will find a lot of similarities in the different distributions, if only because every Linux machine is a box with building blocks that you may put together following your own needs and views. Installing the system is only the beginning of a long-term relationship. Just when you think you have a nice running system, Linux will stimulate your imagination and creativity, and the more you realize what power the system can give you, the more you will try to redefine its limits.

Linux may appear different depending on the distribution, your hardware and personal taste, but the fundamentals on which all graphical and other interfaces are built remain the same. The Linux system is based on GNU tools (GNU's Not UNIX), which provide a set of standard ways to handle and use the system. All GNU tools are open source, so they can be installed on any system. Most distributions offer pre-compiled packages of most common tools, such as RPM packages on RedHat and Debian packages (also called deb or dpkg) on Debian, so you needn't be a programmer to install a package on your system. However, if you are and like doing things yourself, you will enjoy Linux all the better, since most distributions come with a complete set of development tools, allowing installation of new software purely from source code. This setup also allows you to install software even if it does not exist in a pre-packaged form suitable for your system.

A list of common GNU software:

Bash: The GNU shell

GCC: The GNU C Compiler

GDB: The GNU Debugger

Coreutils: a set of basic UNIX-style utilities, such as ls, cat and chmod

Findutils: to search and find files

Fontutils: to convert fonts from one format to another or make new fonts

The Gimp: GNU Image Manipulation Program

Gnome: the GNU desktop environment

Emacs: a very powerful editor

Ghostscript and Ghostview: interpreter and graphical frontend for PostScript files.

GNU Photo: software for interaction with digital cameras

Octave: a programming language, primarily intended to perform numerical computations and image processing.

GNU SQL: relational database system

Radius: a remote authentication and accounting server

...

Many commercial applications are available for Linux, and for more information about these packages we refer to their specific documentation. Throughout this guide we will only discuss freely available software, which comes (in most cases) with a GNU license.

To install missing or new packages, you will need some form of software management. The most common implementations include RPM and dpkg. RPM is the RedHat Package Manager, which is used on a variety of Linux systems, even though the name does not suggest this. Dpkg is the Debian package management system, which uses an interface called apt-get, that can manage RPM packages as well. Novell Ximian Red Carpet is a third party implementation of RPM with a graphical front-end. Other third party software vendors may have their own installation procedures, sometimes resembling InstallShield and the like, as known on MS Windows and other platforms. As you advance into Linux, you will likely get in touch with one or more of these programs.
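To see which of these tools your own system offers, a small check such as the following may help. It is only a sketch: the function name detect_pkg_tools is made up for this illustration, and the tool names it probes (rpm, dpkg, apt-get) are simply the ones mentioned in the paragraph above.

```shell
# Hypothetical helper: print which of the package tools named above
# are installed on this system, or "none" if none of them is found.
detect_pkg_tools() {
    found=""
    for tool in rpm dpkg apt-get; do
        # command -v succeeds only if the shell can find the program
        if command -v "$tool" >/dev/null 2>&1; then
            found="${found:+$found }$tool"
        fi
    done
    echo "${found:-none}"
}

detect_pkg_tools
```

A Debian-style system will typically report dpkg and apt-get, an RPM-based one rpm; the exact result depends on what is installed.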

1.5.2. GNU/Linux

The Linux kernel (the bones of your system, see Section 3.2.3.1) is not part of the GNU project but uses the same license as GNU software. A great majority of utilities and development tools (the meat of your system), which are not Linux-specific, are taken from the GNU project. Because any usable system must contain both the kernel and at least a minimal set of utilities, some people argue that such a system should be called a GNU/Linux system.

In order to obtain the highest possible degree of independence between distributions, this is the sort of Linux that we will discuss throughout this course. If we are not talking about a GNU/Linux system, the specific distribution, version or program name will be mentioned.

1.5.3. Which distribution should I install?

Prior to installation, the most important factor is your hardware. Since every Linux distribution contains the basic packages and can be built to meet almost any requirement (because they all use the Linux kernel), you only need to consider if the distribution will run on your hardware. LinuxPPC for example has been made to run on Apple and other PowerPCs and does not run on an ordinary x86 based PC. LinuxPPC does run on the new Macs, but you can't use it for some of the older ones with ancient bus technology. Another tricky case is Sun hardware, which could be an old SPARC CPU or a newer UltraSparc, both requiring different versions of Linux.

Some Linux distributions are optimized for certain processors, such as Athlon CPUs, while they will at the same time run decently enough on the standard 486, 586 and 686 Intel processors. Sometimes distributions for special CPUs are not as reliable, since they are tested by fewer people.
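On a machine that already runs Linux, or that has been started from a live CD, you could ask the kernel which kind of processor it sees. A minimal sketch, assuming the standard uname utility is available; the function name show_platform is only used for this illustration:

```shell
# Hypothetical helper: show the kernel name and the machine hardware name.
show_platform() {
    # -s prints the kernel name, -m the machine (hardware) name
    echo "kernel:  $(uname -s)"
    echo "machine: $(uname -m)"
}

show_platform
```

On an ordinary 32-bit PC the machine line typically reads something like i686; other hardware reports other names.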

Most Linux distributions offer a set of programs for generic PCs with special packages containing optimized kernels for the x86 Intel based CPUs. These distributions are well-tested and maintained on a regular basis, focusing on reliable server implementation and easy installation and update procedures. Examples are Debian, Ubuntu, Fedora, SuSE and Mandriva, which are by far the most popular Linux systems and generally considered easy to handle for the beginning user, while not blocking professionals from getting the most out of their Linux machines. Linux also runs decently on laptops and middle-range servers. Drivers for new hardware are included only after extensive testing, which adds to the stability of a system.

While the standard desktop might be Gnome on one system, another might offer KDE by default. Generally, both Gnome and KDE are available for all major Linux distributions. Other window and desktop managers are available for more advanced users.

The standard installation process allows users to choose between different basic setups, such as a workstation, where all packages needed for everyday use and development are installed, or a server installation, where different network services can be selected. Expert users can install every combination of packages they want during the initial installation process.

The goal of this guide is to apply to all Linux distributions. For your own convenience, however, it is strongly advised that beginners stick to a mainstream distribution, supporting all common hardware and applications by default. The following are very good choices for novices:

Knoppix: an operating system that runs from your CD-ROM, you don't need to install anything.

Downloadable ISO-images can be obtained from LinuxISO.org. The main distributions can be purchased in any decent computer shop.

1.6. Summary

In this chapter, we learned that:

Linux is an implementation of UNIX.

The Linux operating system is written in the C programming language.

"De gustibus et coloribus non disputandum est": there's a Linux for everyone.

Linux uses GNU tools, a set of freely available standard tools for handling the operating system.

1.7. Exercises

A practical exercise for starters: install Linux on your PC. Read the installation manual for your distribution and/or the Installation HOWTO and do it.

| Read the docs! |
|---|
| Most errors stem from not reading the information provided during the install. Reading the installation messages carefully is the first step on the road to success. |

Things you must know BEFORE starting a Linux installation:

Will this distribution run on my hardware?

Check with http://www.tldp.org/HOWTO/Hardware-HOWTO/index.html when in doubt about compatibility of your hardware.

What kind of keyboard do I have (number of keys, layout)? What kind of mouse (serial/parallel, number of buttons)? How many MB of RAM?

Will I install a basic workstation or a server, or will I need to select specific packages myself?

Will I install from my hard disk, from a CD-ROM, or using the network? Should I adapt the BIOS for any of this? Does the installation method require a boot disk?

Will Linux be the only system on this computer, or will it be a dual boot installation? Should I make a large partition in order to install virtual systems later on, or is this a virtual installation itself?

Is this computer in a network? What is its hostname, IP address? Are there any gateway servers or other important networked machines my box should communicate with?

Linux expects to be networked: not using the network or configuring it incorrectly may result in slow startup.

Is this computer a gateway/router/firewall? (If you have to think about this question, it probably isn't.)

Partitioning: let the installation program do it for you this time, we will discuss partitions in detail in Chapter 3. There is system-specific documentation available if you want to know everything about it. If your Linux distribution does not offer default partitioning, that probably means it is not suited for beginners.

Will this machine start up in text mode or in graphical mode?

Think of a good password for the administrator of this machine (root). Create a non-root user account (non-privileged access to the system).

Do I need a rescue disk? (recommended)

Which languages do I want?

The full checklist can be found at http://www.tldp.org/HOWTO/Installation-HOWTO/index.html.
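If you already have a running Linux system at hand, for instance one started from a live CD, some of the questions above can be answered from the command line. The following is only a sketch under that assumption: the helper name mem_mb is invented here, and it relies on the MemTotal line that Linux kernels publish in /proc/meminfo.

```shell
# Hypothetical helper: convert the MemTotal line of a meminfo-style file
# (value given in kB) into whole megabytes.
mem_mb() {
    awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' "$1"
}

echo "hostname: $(uname -n)"
if [ -r /proc/meminfo ]; then
    echo "RAM (MB): $(mem_mb /proc/meminfo)"
else
    echo "RAM (MB): unknown (no /proc/meminfo on this system)"
fi
```

Questions such as the keyboard layout or the installation method still have to be answered by looking at the hardware and the distribution's manual.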

In the following chapters we will find out if the installation has been successful.

Chapter 2. Quickstart

In order to get the most out of this guide, we will immediately start with a practical chapter on connecting to the Linux system and doing some basic things.

We will discuss:

Connecting to the system

Disconnecting from the system

Text and graphic mode

Changing your password

Navigating through the file system

Determining file type

Looking at text files

Finding help

2.1. Logging in, activating the user interface and logging out

2.1.1. Introduction

In order to work on a Linux system directly, you will need to provide a user name and password. You always need to authenticate to the system. As we already mentioned in the exercise from Chapter 1, most PC-based Linux systems have two basic modes for a system to run in: either quick and sober in text console mode, which looks like DOS with mouse, multitasking and multi-user features, or in graphical mode, which looks better but eats more system resources.

2.1.2. Graphical mode

This is the default nowadays on most desktop computers. You know you will connect to the system using graphical mode when you are first asked for your user name, and then, in a new window, to type your password.

To log in, make sure the mouse pointer is in the login window, provide your user name and password to the system and click or press Enter.

| Careful with that root account! |
|---|
| It is generally considered a bad idea to connect (graphically) using the root user name, the system administrator's account, since the use of graphics includes running a lot of extra programs, in root's case with a lot of extra permissions. To keep all risks as low as possible, use a normal user account to connect graphically. But there are enough risks to keep this in mind as general advice for all use of the root account: only log in as root when extra privileges are required. |

After entering your user name/password combination, it can take a little while before the graphical environment is started, depending on the CPU speed of your computer, on the software you use and on your personal settings.

To continue, you will need to open a terminal window or xterm for short (X being the name for the underlying software supporting the graphical environment). This program can be found in one of the desktop menus; which one depends on what window manager you are using. There might be icons that you can use as a shortcut to get an xterm window as well, and clicking the right mouse button on the desktop background will usually present you with a menu containing a terminal window application.

While browsing the menus, you will notice that a lot of things can be done without entering commands via the keyboard. For most users, the good old point-'n'-click method of dealing with the computer will do. But this guide is for future network and system administrators, who will need to meddle with the heart of the system. They need a stronger tool than a mouse to handle all the tasks they will face. This tool is the shell, and when in graphical mode, we activate our shell by opening a terminal window.

The terminal window is your control panel for the system. Almost everything that follows is done using this simple but powerful text tool. A terminal window should always show a command prompt when you open one. A standard prompt displays the user's login name and the current working directory, which is represented by the twiddle (~) when you are in your home directory.

Another common form for a prompt is this one:

    [user@host dir]

In the above example, user will be your login name, host the name of the machine you are working on, and dir an indication of your current location in the file system.

Later we will discuss prompts and their behavior in detail. For now, it suffices to know that prompts can display all kinds of information, but that they are not part of the commands you are giving to your system.
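To make clear where the three parts of such a prompt come from, the following sketch prints them with ordinary commands. The function name show_prompt_parts is invented for this illustration, and the output only imitates a prompt: it is not one.

```shell
# Hypothetical helper: print the three pieces of information shown in
# a [user@host dir] prompt.
show_prompt_parts() {
    user=$(id -un)            # your login name
    host=$(uname -n)          # the name of this machine
    dir=$(basename "$PWD")    # last component of the current directory
    printf '[%s@%s %s]\n' "$user" "$host" "$dir"
}

show_prompt_parts
```

In Bash, a prompt of this form is commonly obtained with a setting like PS1='[\u@\h \W]\$ ', in which case the home directory is shown as ~ rather than by its name.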

To disconnect from the system in graphical mode, you need to close all terminal windows and other applications. After that, hit the logout icon or find the logout option in the menu. Closing everything is not really necessary, and the system can do this for you, but session management might put all currently open applications back on your screen when you connect again, which takes longer and is not always the desired effect. However, this behavior is configurable.

When you see the login screen again, asking to enter user name and password, logout was successful.

| Gnome or KDE? |
|---|
| We mentioned both the Gnome and KDE desktops already a couple of times. These are the two most popular ways of managing your desktop, although there are many, many others. Whatever desktop you choose to work with is fine - as long as you know how to open a terminal window. However, we will continue to refer to both Gnome and KDE for the most popular ways of achieving certain tasks. |

2.1.3. Text mode

You know you're in text mode when the whole screen is black, showing (in most cases white) characters. A text mode login screen typically shows some information about the machine you are working on, the name of the machine and a prompt waiting for you to log in:

    RedHat Linux Release 8.0 (Psyche)

    blast login: _

The login is different from a graphical login, in that you have to hit the Enter key after providing your user name, because there are no buttons on the screen that you can click with the mouse. Then you should type your password, followed by another Enter. You won't see any indication that you are entering something, not even an asterisk, and you won't see the cursor move. But this is normal on Linux and is done for security reasons.

When the system has accepted you as a valid user, you may get some more information, called the message of the day, which can be anything. Additionally, it is popular on UNIX systems to display a fortune cookie, which contains some general wise or unwise (this is up to you) thoughts. After that, you will be given a shell, indicated with the same prompt that you would get in graphical mode.

| Don't log in as root |
|---|
| Also in text mode: log in as root only to do setup and configuration that absolutely requires administrator privileges, such as adding users, installing software packages, and performing network and other system configuration. Once you are finished, immediately leave the special account and resume your work as a non-privileged user. Alternatively, some systems, like Ubuntu, force you to use sudo, so that you do not need direct access to the administrative account. |

Logging out is done by entering the logout command, followed by Enter. You are successfully disconnected from the system when you see the login screen again.

| The power button |
|---|
| While Linux was not meant to be shut off without application of the proper procedures for halting the system, hitting the power button is equivalent to starting those procedures on newer systems. However, powering off an old system without going through the halting process might cause severe damage! If you want to be sure, always use the shutdown option when you log out from the graphical interface, or, when on the login screen (where you have to give your user name and password), look around for a shutdown button. |

Now that we know how to connect to and disconnect from the system, we're ready for our first commands.

2.2. Absolute basics

2.2.1. The commands

These are the quickies, which we need to get started; we will discuss them later in more detail.

Table 2-1. Quickstart commands

| Command | Meaning |
|---|---|
| ls | display a list of files in the current working directory, like the dir command in DOS |
| cd directory | change directories |
| passwd | change the password for the current user |
| file filename | display file type of file with name filename |
| cat textfile | throw content of textfile on the screen |
| pwd | display present working directory |
| exit or logout | leave this session |
| man command | read man pages on command |
| info command | read Info pages on command |
| apropos string | search the whatis database for strings |
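A few of the commands from the table can be tried safely in a throwaway directory. This is only a sketch: the scratch directory and the file name notes.txt are invented for the example, and it assumes the mktemp utility found on most Linux systems.

```shell
# Work in a throwaway directory so that nothing in your home directory changes.
scratch=$(mktemp -d)
cd "$scratch"

pwd                        # where are we now?
echo "hello" > notes.txt   # create a small text file to play with
ls                         # the listing now shows notes.txt
cat notes.txt              # throws the content of notes.txt on the screen
if command -v file >/dev/null 2>&1; then
    file notes.txt         # reports the file type, if file is installed
fi
```

The passwd, man and info commands are left out here because they wait for keyboard input.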

2.2.2. General remarks

You type these commands after the prompt, in a terminal window in graphical mode or in text mode, followed by Enter.

Commands can be issued by themselves, such as ls. A command behaves differently when you specify an option, usually preceded with a dash (-), as in ls -a. The same option character may have a different meaning for another command. GNU programs take long options, preceded by two dashes (--), like ls --all. Some commands have no options.

The argument(s) to a command are specifications for the object(s) on which you want the command to take effect. An example is ls /etc, where the directory /etc is the argument to the ls command. This indicates that you want to see the content of that directory, instead of the default, which would be the content of the current directory, obtained by just typing ls followed by Enter. Some commands require arguments, sometimes arguments are optional.
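The difference between a bare command, an option and an argument can be tried out as follows. This is a sketch using an invented scratch directory and invented file names; the long option assumes GNU ls, which is what most Linux distributions ship.

```shell
# Create a scratch directory with one normal and one hidden file.
demo=$(mktemp -d)
touch "$demo/visible.txt" "$demo/.hidden"

ls "$demo"        # argument only: lists visible.txt
ls -a "$demo"     # short option: also lists ., .. and .hidden
# Long spelling of the same option; assumes GNU ls.
ls --all "$demo" || echo "this ls does not accept long options"
```

The directory name is the argument in all three cases; only the option changes what ls shows about it.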

You can find out whether a command takes options and arguments, and which ones are valid, by checking the online help for that command, see Section 2.3.

In Linux, like in UNIX, directories are separated using forward slashes, like the ones used in web addresses (URLs). We will discuss directory structure in-depth later.

The symbols . and .. have special meaning when directories are concerned. We will try to find out about those during the exercises, and more in the next chapter.

Try to avoid logging in with or using the system administrator's account, root. Besides doing your normal work, most tasks, including checking the system, collecting information etc., can be executed using a normal user account with no special permissions at all. If needed, for instance when creating a new user or installing new software, the preferred way of obtaining root access is by switching user IDs, see Section 3.2.1 for an example.
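If you are ever unsure which account a shell is running under, you can ask for the numeric user ID: the root account always has ID 0. A minimal sketch, with a function name (who_am_i_really) made up for the occasion:

```shell
# Hypothetical helper: report whether the current shell runs as root.
who_am_i_really() {
    if [ "$(id -u)" -eq 0 ]; then
        echo "root"
    else
        echo "normal user"
    fi
}

echo "You are working as: $(who_am_i_really)"
```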

Almost all commands in this book can be executed without system administrator privileges. In most cases, when issuing a command or starting a program as a non-privileged user, the system will warn you or prompt you for the root password when root access is required. Once you're done, leave the application or session that gives you root privileges immediately.

Reading documentation should become your second nature. Especially in the beginning, it is important to read system documentation, manuals for basic commands, HOWTOs and so on. Since the amount of documentation is so enormous, it is impossible to include all related documentation. This book will try to guide you to the most appropriate documentation on every subject discussed, in order to stimulate the habit of reading the man pages.

2.2.3. Using Bash features

Several special key combinations allow you to do things more easily and quickly with the GNU shell, Bash, which is the default on almost any Linux system, see Section 3.2.3.2. Below is a list of the most commonly used features; you are strongly advised to make a habit out of using them, so as to get the most out of your Linux experience from the very beginning.

Table 2-2. Key combinations in Bash

| Key or key combination | Function |
|---|---|
| Ctrl+A | Move cursor to the beginning of the command line. |
| Ctrl+C | End a running program and return the prompt, see Chapter 4. |
| Ctrl+D | Log out of the current shell session, equal to typing exit or logout. |
| Ctrl+E | Move cursor to the end of the command line. |
| Ctrl+H | Generate backspace character. |
| Ctrl+L | Clear this terminal. |
| Ctrl+R | Search command history, see Section 3.3.3.4. |
| Ctrl+Z | Suspend a program, see Chapter 4. |
| ArrowLeft and ArrowRight | Move the cursor one place to the left or right on the command line, so that you can insert characters at other places than just at the beginning and the end. |
| ArrowUp and ArrowDown | Browse history. Go to the line that you want to repeat, edit details if necessary, and press Enter to save time. |
| Shift+PageUp and Shift+PageDown | Browse terminal buffer (to see text that has "scrolled off" the screen). |
| Tab | Command or filename completion; when multiple choices are possible, the system will either signal with an audio or visual bell, or, if too many choices are possible, ask you if you want to see them all. |
| Tab Tab | Shows file or command completion possibilities. |

The last two items in the above table may need some extra explanations. For instance, if you want to change into the directory directory_with_a_very_long_name, you are not going to type that very long name, no. You just type on the command line cd dir, then you press Tab and the shell completes the name for you, if no other files are starting with the same three characters. Of course, if there are no other items starting with "d", then you might just as well type cd d and then Tab. If more than one file starts with the same characters, the shell will signal this to you, upon which you can hit Tab twice with short interval, and the shell presents the choices you have:

    your_prompt> cd st
    starthere   stuff   stuffit

In the above example, if you type "a" after the first two characters and hit Tab again, no other possibilities are left, and the shell completes the directory name, without you having to type the string "rthere":

    your_prompt> cd starthere

Of course, you'll still have to hit Enter to accept this choice.

In the same example, if you type "u", and then hit Tab, the shell will add the "ff" for you, but then it protests again, because multiple choices are possible. If you type Tab Tab again, you'll see the choices; if you type one or more characters that make the choice unambiguous to the system, and Tab again, or Enter when you've reached the end of the file name that you want to choose, the shell completes the file name and changes you into that directory - if indeed it is a directory name.

This works for all file names that are arguments to commands.

The same goes for command name completion. Typing ls and then hitting the Tab key twice lists all the commands in your PATH (see Section 3.2.1) that start with these two characters:

    your_prompt> ls
    ls           lsdev        lspci        lsraid       lsw
    lsattr       lsmod        lspgpot      lss16toppm
    lsb_release  lsof         lspnp        lsusb
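What the shell does for command completion can be imitated by walking through the directories in PATH and listing the executables whose names start with the given characters. The sketch below is not how Bash implements completion; it only illustrates the idea, and the function name list_commands is invented.

```shell
# Hypothetical helper: list executables in PATH whose name starts with
# the given prefix. The body runs in a subshell so that the changed IFS
# does not leak into the rest of the session.
list_commands() (
    prefix=$1
    IFS=:                       # PATH entries are separated by colons
    for dir in $PATH; do
        for f in "$dir/$prefix"*; do
            # keep executable files only; this also skips patterns
            # that matched nothing, and directories
            if [ -f "$f" ] && [ -x "$f" ]; then
                basename "$f"
            fi
        done
    done
)

list_commands ls | sort -u
```

The list you get depends entirely on the software installed on your system, so it will rarely match the example above exactly.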

2.3. Getting help

2.3.1. Be warned

GNU/Linux is all about becoming more self-reliant. And as usual with this system, there are several ways to achieve the goal. A common way of getting help is finding someone who knows, and however patient and peace-loving the Linux-using community will be, almost everybody will expect you to have tried one or more of the methods in this section before asking them, and the ways in which this viewpoint is expressed may be rather harsh if you prove not to have followed this basic rule.

2.3.2. The man pages

A lot of beginning users fear the man (manual) pages, because they are an overwhelming source of documentation. They are, however, very structured, as you will see from the example below on: man man.

Reading man pages is usually done in a terminal window when in graphical mode, or just in text mode if you prefer it. Type the command like this at the prompt, followed by Enter:

    yourname@yourcomp ~> man man

The documentation for man will be displayed on your screen after you press Enter:

    man(1)                                                          man(1)

    NAME
           man - format and display the on-line manual pages
           manpath - determine user's search path for man pages

    SYNOPSIS
           man [-acdfFhkKtwW] [--path] [-m system] [-p string] [-C config_file]
           [-M pathlist] [-P pager] [-S section_list] [section] name ...

    DESCRIPTION
           man formats and displays the on-line manual pages.  If you specify
           section, man only looks in that section of the manual.  name is
           normally the name of the manual page, which is typically the name
           of a command, function, or file.  However, if name contains a slash
           (/) then man interprets it as a file specification, so that you can
           do man ./foo.5 or even man /cd/foo/bar.1.gz.

           See below for a description of where man looks for the manual
           page files.

    OPTIONS
           -C config_file
    lines 1-27

Browse to the next page using the space bar. You can go back to the previous page using the b-key. When you reach the end, man will usually quit and you get the prompt back. Type q if you want to leave the man page before reaching the end, or if the viewer does not quit automatically at the end of the page.

| Pagers |
|---|
| The available key combinations for manipulating the man pages depend on the pager used in your distribution. Most distributions use less to view the man pages and to scroll around. See Section 3.3.4.2 for more info on pagers. |

Each man page usually contains a couple of standard sections, as we can see from the man man example:

The first line contains the name of the command you are reading about, and the id of the section in which this man page is located. The man pages are ordered in chapters. Commands are likely to have multiple man pages, for example the man page from the user section, the man page from the system admin section, and the man page from the programmer section.

The name of the command and a short description are given, which is used for building an index of the man pages. You can look for any given search string in this index using the apropos command.

The synopsis of the command provides a technical notation of all the options and/or arguments this command can take. You can think of an option as a way of executing the command. The argument is what you execute it on. Some commands have no options or no arguments. Optional options and arguments are put in between "[" and "]" to indicate that they can be left out.

A longer description of the command is given.

Options with their descriptions are listed. Options can usually be combined. If not so, this section will tell you about it.

Environment describes the shell variables that influence the behavior of this command (not all commands have this).

Sometimes sections specific to this command are provided.

References to other man pages are given in the "SEE ALSO" section. The number in parentheses is the man page section in which to find the command. Experienced users often jump to the "SEE ALSO" part by typing the / command followed by the search string SEE and pressing Enter.

Usually there is also information about known bugs (anomalies) and where to report new bugs you may find.

There might also be author and copyright information.
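As a rough illustration of this structure, the sketch below prints only the section headings of a formatted man page. The function name list_sections is made up for this example. It assumes that a heading is a line consisting solely of capital letters and spaces, which holds for most pages but is not guaranteed. The sample text is an abbreviated, hand-written excerpt, not the real page.

```shell
# list_sections (hypothetical helper): read a formatted man page on
# standard input and print only its section headings.
# Assumption: a heading is a line made up solely of capital letters and
# spaces, starting in the first column (NAME, SYNOPSIS, SEE ALSO, ...).
list_sections() {
    grep -E '^[[:upper:]][[:upper:] ]*$'
}

# Demonstration on a small hand-written excerpt, so that the example
# does not depend on which man pages are installed.
list_sections <<'EOF'
man(1)                                                  man(1)
NAME
       man - format and display the on-line manual pages
SYNOPSIS
       man [section] name ...
SEE ALSO
       apropos(1), whatis(1), less(1)
EOF
```

On a real system you could try something like man man | col -b | list_sections. The col -b step is there because some versions of man emit backspace sequences for bold and underlined text when their output is piped.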

Some commands have multiple man pages. For instance, the passwd command has a man page in section 1 and another in section 5. By default, the man page with the lowest number is shown. If you want to see a section other than the default, specify it after the man command:

man 5 passwd

If you want to see all man pages about a command, one after the other, use the -a option to man:

man -a passwd

This way, when you reach the end of the first man page and press SPACE again, the man page from the next section will be displayed.
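To find out which sections are available before choosing one, the output of whatis (introduced in Section 2.3.3.2) can be picked apart. The following is a minimal sketch; page_sections is a hypothetical helper name, and the sample descriptions are illustrative only and may not match what your system prints.

```shell
# page_sections (hypothetical helper): read whatis-style lines of the form
# "name (section) - description" and print the section of each entry.
page_sections() {
    sed -n 's/^[^(]*(\([^)]*\)).*$/\1/p'
}

# Sample input; the descriptions are illustrative and may differ from
# what whatis prints on your system.
page_sections <<'EOF'
passwd (1) - change a user's password
passwd (5) - the password file
EOF
```

With real data this would be used as whatis passwd | page_sections, after which man 5 passwd opens the page from the section you picked.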

2.3.3. More info

2.3.3.1. The Info pages

In addition to the man pages, you can read the Info pages about a command, using the info command. These usually contain more recent information and are somewhat easier to use. The man pages for some commands refer to the Info pages.

Get started by typing info info in a terminal window:

File: info.info,  Node: Top,  Next: Getting Started,  Up: (dir)

Info: An Introduction
*********************

Info is a program, which you are using now, for reading documentation of computer programs. The GNU Project distributes most of its on-line manuals in the Info format, so you need a program called "Info reader" to read the manuals. One of such programs you are using now.

If you are new to Info and want to learn how to use it, type the command `h' now. It brings you to a programmed instruction sequence.

To learn advanced Info commands, type `n' twice. This brings you to `Info for Experts', skipping over the `Getting Started' chapter.

* Menu:

* Getting Started::        Getting started using an Info reader.
* Advanced Info::          Advanced commands within Info.
* Creating an Info File::  How to make your own Info file.

--zz-Info: (info.info.gz)Top, 24 lines --Top-------------------------------
Welcome to Info version 4.2. Type C-h for help, m for menu item.

Use the arrow keys to browse through the text and move the cursor to a line starting with an asterisk that contains the keyword about which you want info, then hit Enter. Use the P and N keys to go to the previous or next subject. The space bar moves you one page further, no matter whether this starts a new subject or an Info page for another command. Use Q to quit. The info program itself has more information.

2.3.3.2. The whatis and apropos commands

A short index of explanations for commands is available using the whatis command, as in the example below:

[your_prompt] whatis ls
ls (1) - list directory contents

This displays short information about a command, and the first section in the collection of man pages that contains an appropriate page.

If you don't know where to get started and which man page to read, apropos gives more information. Say that you don't know how to start a browser, then you could enter the following command:

another prompt> apropos browser
Galeon [galeon](1) - gecko-based GNOME web browser
lynx (1) - a general purpose distributed information browser for the World Wide Web
ncftp (1) - Browser program for the File Transfer Protocol
opera (1) - a graphical web browser
pilot (1) - simple file system browser in the style of the Pine Composer
pinfo (1) - curses based lynx-style info browser
pinfo [pman] (1) - curses based lynx-style info browser
viewres (1x) - graphical class browser for Xt

After pressing Enter you will see that a lot of browser-related software is on your machine: not only web browsers, but also file and FTP browsers, and browsers for documentation. If you have development packages installed, you may also have the accompanying man pages dealing with writing programs that have to do with browsers. Generally, a command with a man page in section one, so one marked with "(1)", is suitable for trying out as a user. The user who issued the above apropos might consequently try to start the commands galeon, lynx or opera, since these clearly have to do with browsing the World Wide Web.
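The rule of thumb about section one can be applied mechanically by filtering the apropos output. This is only a sketch: user_commands is a hypothetical helper name, and it simply keeps lines that contain the literal text "(1)", so entries in related sections such as "(1x)" are dropped.

```shell
# user_commands (hypothetical helper): keep only the apropos lines whose
# man page lives in section 1, that is, the ones marked "(1)".
user_commands() {
    grep -F '(1)'
}

# A few lines taken from the apropos output shown above.
user_commands <<'EOF'
lynx (1) - a general purpose distributed information browser for the World Wide Web
opera (1) - a graphical web browser
viewres (1x) - graphical class browser for Xt
EOF
```

On a live system the same filter would be used as apropos browser | user_commands.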

2.3.3.3. The --help option

Most GNU commands support the --help option, which gives a short explanation of how to use the command and a list of available options. Below is the output of this option with the cat command:

userprompt@host: cat --help
Usage: cat [OPTION] [FILE]...
Concatenate FILE(s), or standard input, to standard output.

  -A, --show-all           equivalent to -vET
  -b, --number-nonblank    number nonblank output lines
  -e                       equivalent to -vE
  -E, --show-ends          display $ at end of each line
  -n, --number             number all output lines
  -s, --squeeze-blank      never more than one single blank line
  -t                       equivalent to -vT
  -T, --show-tabs          display TAB characters as ^I
  -u                       (ignored)
  -v, --show-nonprinting   use ^ and M- notation, except for LFD and TAB
      --help     display this help and exit
      --version  output version information and exit

With no FILE, or when FILE is -, read standard input.

Report bugs to <bug-textutils@gnu.org>.
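When the help text is long, it can be convenient to show only the lines about one option. The sketch below does that with grep; help_for is a hypothetical helper name, and the example assumes that the installed cat accepts --help, which is the case for the GNU version but not necessarily for others.

```shell
# help_for (hypothetical helper): show only the lines of a command's
# --help output that mention a given option.
# $1 = command name, $2 = option to look for (for example --number)
help_for() {
    # 2>&1: some programs print their help text on standard error.
    "$1" --help 2>&1 | grep -e "$2"
}

# Example: look up what the --number option of cat does, if the installed
# cat supports --help.
help_for cat --number || echo "no --help text mentioning --number found"
```

The -e flag tells grep that the next argument is the search pattern, even though it starts with dashes.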

2.3.3.4. Graphical help

Don't despair if you prefer a graphical user interface. Konqueror, the default KDE file manager, provides painless and colourful access to the man and Info pages. Try entering "info:info" in the Location bar to get a browsable Info page about the info command. Similarly, "man:ls" presents the man page for the ls command. You even get command name completion: a scroll-down menu shows the man pages for all commands starting with "ls". Entering "info:/dir" in the Location bar displays all the Info pages, arranged in utility categories. The help content is excellent and includes the Konqueror Handbook. Start Konqueror from the menu or by typing the command konqueror in a terminal window, followed by Enter; see the screenshot below.

The Gnome Help Browser is very user friendly as well. You can start it by selecting -> from the Gnome menu, by clicking the lifeguard icon on your desktop or by entering the command gnome-help in a terminal window. The system documentation and man pages are easily browsable with a plain interface.

The nautilus file manager provides a searchable index of the man and Info pages, which are easily browsable and interlinked. Nautilus can be started from the command line, by clicking your home directory icon, or from the Gnome menu.

The big advantage of GUIs for system documentation is that all information is completely interlinked, so you can click through in the "SEE ALSO" sections and wherever links to other man pages appear, and thus browse and acquire knowledge without interruption for hours at a time.

2.3.3.5. Exceptions

Some commands don't have separate documentation, because they are part of another command. cd, exit, logout and pwd are such exceptions. They are part of your shell program and are called shell built-in commands. For information about these, refer to the man or info page of your shell. Most beginning Linux users have a Bash shell. See Section 3.2.3.2 for more about shells.
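A quick way to check whether a command is one of these exceptions is to ask the shell itself with type. The sketch below wraps that check; is_builtin is a hypothetical helper name, and it assumes that the output of type contains the word "builtin" for built-ins, as it does in Bash and in common sh implementations.

```shell
# is_builtin (hypothetical helper): succeed if the shell reports the given
# name as a built-in command.
# Assumption: the output of "type" contains the word "builtin" for
# built-ins, as it does in Bash and in common sh implementations.
is_builtin() {
    case "$(type "$1" 2>/dev/null)" in
        *builtin*) return 0 ;;
        *)         return 1 ;;
    esac
}

for name in cd pwd exit logout; do
    if is_builtin "$name"; then
        echo "$name: shell built-in, see the documentation of your shell"
    else
        echo "$name: not reported as a built-in by this shell"
    fi
done
```

The result depends on the shell: logout, for instance, is a built-in in Bash but may be unknown to other shells. In Bash, help cd prints the shell's own short documentation for a built-in.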

If you have been changing your original system configuration, it might also be possible that man pages are still there, but not visible because your shell environment has changed. In that case, you will need to check the MANPATH variable. How to do this is explained in Section 7.2.1.2.
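As a first check, you can display the variable one directory per line. This is a minimal sketch with a hypothetical helper name, show_manpath; it only prints the value and does not verify that the directories exist.

```shell
# show_manpath (hypothetical helper): print the directories of a
# colon-separated search path, one per line.
show_manpath() {
    printf '%s\n' "$1" | tr ':' '\n'
}

if [ -n "${MANPATH:-}" ]; then
    show_manpath "$MANPATH"
else
    echo "MANPATH is not set; man uses its default search path"
fi
```

An unset MANPATH is not an error in itself; it only becomes a concern when the variable is set to a value that leaves out the directories holding your man pages.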

Some programs or packages only have a set of instructions or references in the directory /usr/share/doc. See Section 3.3.4 for ways to display these files.

In the worst case, you may have removed the documentation from your system by accident (hopefully by accident, because it is a very bad idea to do this on purpose). In that case, first use a search tool to make sure that there is really nothing appropriate left; see Section 3.3.3. If nothing turns up, you may have to re-install the package that contains the command to which the documentation applied; see Section 7.5.

2.4. Summary

Linux traditionally operates in text mode or in graphical mode. Since CPU power and RAM are no longer a significant cost, every Linux user can afford to work in graphical mode and will usually do so. This does not mean that you don't have to know about text mode: we will work in the text environment throughout this course, using a terminal window.

Linux encourages its users to acquire knowledge and to become independent. Inevitably, you will have to read a lot of documentation to achieve that goal; that is why, as you will notice, we refer to extra documentation for almost every command, tool and problem listed in this book. The more docs you read, the easier it will become and the faster you will leaf through manuals. Make reading documentation a habit as soon as possible. When you don't know the answer to a problem, referring to the documentation should become second nature.

We already learned some commands:

Table 2-3. New commands in chapter 2: Basics

| Command | Meaning |
|---|---|
| apropos | Search information about a command or subject. |
| cat | Show content of one or more files. |
| cd | Change into another directory. |
| exit | Leave a shell session. |
| file | Get information about the content of a file. |
| info | Read Info pages about a command. |
| logout | Leave a shell session. |
| ls | List directory content. |
| man | Read manual pages of a command. |
| passwd | Change your password. |
| pwd | Display the current working directory. |

2.5. Exercises

Most of what we learn is by making mistakes and by seeing how things can go wrong. These exercises are made to get you to read some error messages. The order in which you do these exercises is important.

Don't forget to use the Bash features on the command line: try to do the exercises typing as few characters as possible!

2.5.1. Connecting and disconnecting

Determine whether you are working in text or in graphical mode.

I am working in text/graphical mode. (cross out what's not applicable)

Log in with the user name and password you made for yourself during the installation.

Log out.

Log in again, using a non-existent user name.

-> What happens?

2.5.2. Passwords

Log in again with your user name and password.

Change your password to P6p3.aa! and hit the Enter key.

-> What happens?

Try again, this time enter a password that is ridiculously easy, like 123 or aaa.

-> What happens?

Try again, this time don't enter a password but just hit the Enter key.

-> What happens?

Try the command psswd instead of passwd

-> What happens?

| New password |
|---|
| Unless you change your password back to what it was before this exercise, it will be "P6p3.aa!". Change your password after this exercise! Note that some systems might not allow you to reuse passwords, that is, to restore the original one within a certain amount of time, a certain number of password changes, or both. |

2.5.3. Directories

These are some exercises to help you get the feel.

Enter the command cd blah

-> What happens?

Enter the command cd ..

Mind the space between "cd" and ".."! Use the pwd command.

-> What happens?

List the directory contents with the ls command.

-> What do you see?

-> What do you think these are?

-> Check using the pwd command.

Enter the cd command.

-> What happens?

Repeat step 2 two times.